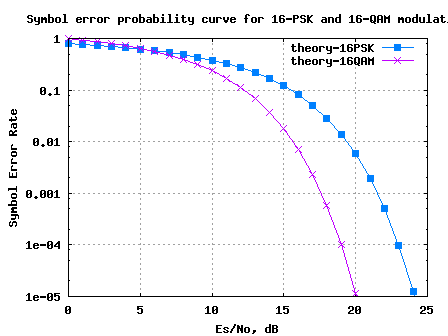

Comparing 16-PSK vs 16-QAM for symbol error rate

In two previous posts, we derived the theoretical symbol error rate for the 16-QAM and 16-PSK modulation schemes. The links are:

(a) Symbol error rate for 16-PSK

(b) Symbol error rate for 16-QAM

Given that we are transmitting the same number of constellation points in both 16-PSK and 16-QAM, let us try to understand the better…
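To put the two results side by side, here is a minimal sketch that evaluates both symbol error rate expressions over a range of Es/N0 values. It assumes the standard AWGN forms: for 16-PSK the high-SNR nearest-neighbour approximation Ps ≈ erfc(sqrt(Es/N0)·sin(π/16)), and for square 16-QAM with average symbol energy Es the expression Ps = (3/2)·erfc(sqrt(Es/(10·N0))) − (9/16)·erfc²(sqrt(Es/(10·N0))). If the linked derivations use a different energy normalization, the arguments of erfc change accordingly. The function names are hypothetical helpers chosen for illustration.

```python
import math


def db_to_linear(db):
    """Convert a ratio in dB to a linear power ratio."""
    return 10.0 ** (db / 10.0)


def ser_16psk(es_n0):
    """Approximate 16-PSK symbol error rate in AWGN.

    Assumes the high-SNR nearest-neighbour approximation
    Ps ~= erfc(sqrt(Es/N0) * sin(pi/16)); es_n0 is linear, not dB.
    """
    return math.erfc(math.sqrt(es_n0) * math.sin(math.pi / 16))


def ser_16qam(es_n0):
    """Theoretical symbol error rate of square 16-QAM in AWGN.

    Assumes the constellation is normalized to average symbol energy Es,
    which places the argument of erfc at sqrt(Es / (10 * N0)).
    """
    e = math.erfc(math.sqrt(es_n0 / 10.0))
    return 1.5 * e - (9.0 / 16.0) * e * e


if __name__ == "__main__":
    # Print both curves on a coarse 5 dB grid for a quick comparison.
    for es_n0_db in range(0, 31, 5):
        g = db_to_linear(es_n0_db)
        print(f"Es/N0 = {es_n0_db:2d} dB   "
              f"16-PSK SER = {ser_16psk(g):.3e}   "
              f"16-QAM SER = {ser_16qam(g):.3e}")
```

As a sanity check, at Es/N0 → 0 the 16-QAM expression tends to 3/2 − 9/16 = 15/16, the error rate of a receiver that guesses one of 16 symbols at random; the 16-PSK approximation tends to 1 instead, since it is only accurate at high SNR.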