Closed-form solution for linear regression

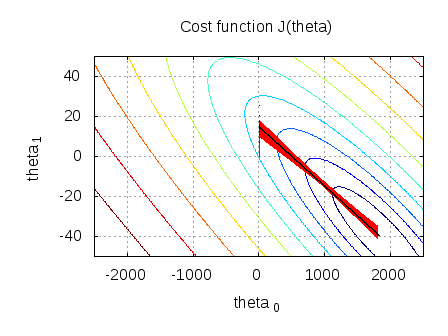

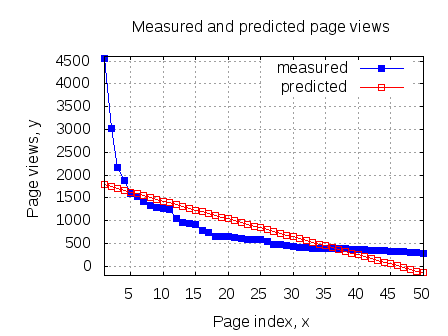

In the previous post on Batch Gradient Descent and Stochastic Gradient Descent, we looked at two iterative methods for finding the parameter vector that minimizes the sum of squared errors between the predicted and actual outputs over the training set. A closed-form solution for the parameter vector is also possible, and in this post…