GATE-2012 ECE Q24 (math)

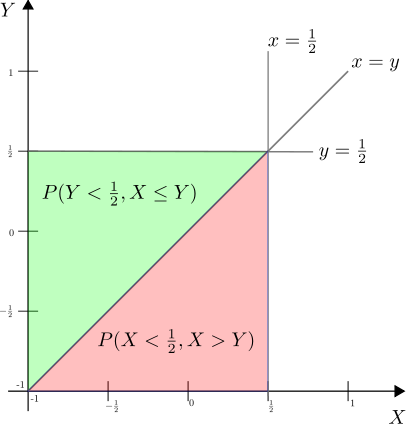

Question 24 (mathematics) from the GATE (Graduate Aptitude Test in Engineering) 2012 Electronics and Communication Engineering paper.

Q24. Two independent random variables X and Y are uniformly distributed in the interval [-1, 1]. The probability that max[X, Y] is less than 1/2 is

(A) 3/4 (B) 9/16 (C) 1/4 (D) 2/3
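The key step is that max[X, Y] < 1/2 holds exactly when both X < 1/2 and Y < 1/2. Because X and Y are independent, the probability factors: P(max[X, Y] < 1/2) = P(X < 1/2) · P(Y < 1/2). For a uniform variable on [-1, 1], P(X < 1/2) = (1/2 - (-1)) / 2 = 3/4, so the result is (3/4)^2 = 9/16, which is option (B). The Python sketch below checks this in two ways: an exact calculation with `fractions.Fraction` and a Monte Carlo simulation. The helper names (`uniform_cdf`, `prob_max_below`, `monte_carlo`), the trial count, and the seed are illustrative choices, not part of the original question.

```python
import random
from fractions import Fraction


def uniform_cdf(t, a=Fraction(-1), b=Fraction(1)):
    """CDF of a Uniform[a, b] random variable evaluated at t (hypothetical helper)."""
    if t <= a:
        return Fraction(0)
    if t >= b:
        return Fraction(1)
    return (t - a) / (b - a)


def prob_max_below(t):
    """Exact P(max(X, Y) < t) for independent X, Y ~ Uniform[-1, 1]."""
    # max(X, Y) < t exactly when X < t and Y < t; independence lets us multiply.
    return uniform_cdf(t) ** 2


def monte_carlo(t, trials=200_000, seed=0):
    """Simulation estimate of the same probability (illustrative trial count and seed)."""
    rng = random.Random(seed)
    hits = sum(
        max(rng.uniform(-1, 1), rng.uniform(-1, 1)) < t
        for _ in range(trials)
    )
    return hits / trials


exact = prob_max_below(Fraction(1, 2))
print(exact)             # 9/16
print(monte_carlo(0.5))  # a value close to 0.5625
```

The same factoring generalizes to any threshold t in [-1, 1]: P(max[X, Y] < t) = ((t + 1) / 2)^2.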