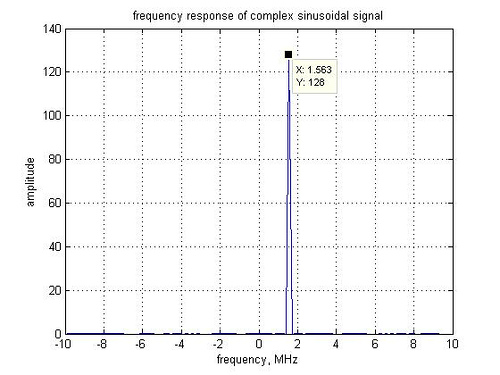

Interpreting the output of the fft() operation in Matlab

It might be interesting to interpret the output of the fft() function in Matlab. Consider the following simple examples.

    fsMHz = 20; % sampling frequency
    fcMHz = 1.5625; % signal frequency
    N = 128; % fft size
    % generating the time domain signal
    x1T = exp(j*2*pi*fcMHz*[0:N-1]/fsMHz);
    x1F = fft(x1T,N); % 128 pt FFT
    figure;
    plot([-N/2:N/2-1]*fsMHz/N,fftshift(abs(x1F)))…
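As a rough check on how to read this output, the sketch below reuses the parameters from the example (fsMHz, fcMHz, N) and works out which FFT bin the tone should land in. The names binSpacingMHz, expectedBin, peakBin, and peakFreqMHz are introduced here for illustration only and are not part of the original example. With these values the bin spacing is fsMHz/N = 0.15625 MHz, so the tone at 1.5625 MHz should fall exactly on bin 10 (counting from zero).

```matlab
fsMHz = 20;       % sampling frequency (from the example)
fcMHz = 1.5625;   % signal frequency (from the example)
N     = 128;      % fft size (from the example)

% time domain signal: complex exponential at fcMHz
n   = 0:N-1;
x1T = exp(1j*2*pi*fcMHz*n/fsMHz);
x1F = fft(x1T, N);

% spacing between adjacent FFT bins, and the bin the tone should occupy
binSpacingMHz = fsMHz/N;               % 0.15625 MHz per bin
expectedBin   = fcMHz/binSpacingMHz;   % zero-based bin index

% locate the peak in the raw fft() output (Matlab indices are one-based)
[peakMag, peakIdx] = max(abs(x1F));
peakBin = peakIdx - 1;

% locate the same peak on the shifted frequency axis used for plotting
freqAxisMHz     = (-N/2:N/2-1)*fsMHz/N;
[~, shiftedIdx] = max(fftshift(abs(x1F)));
peakFreqMHz     = freqAxisMHz(shiftedIdx);

fprintf('expected bin %g, peak bin %d, peak at %g MHz, magnitude %g\n', ...
        expectedBin, peakBin, peakFreqMHz, peakMag);
```

Because the tone sits exactly on a bin centre, all of its energy should appear in that single bin, with a magnitude close to N. A tone that does not fall on a multiple of fsMHz/N would instead spread across neighbouring bins.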