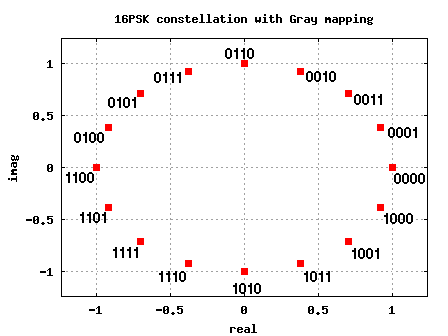

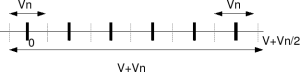

Gray code to Binary conversion for PSK and PAM

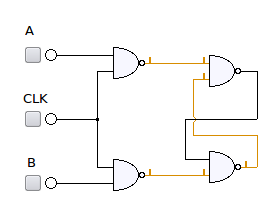

Having discussed binary to Gray code conversion, let us now discuss Gray to binary conversion.

Conversion from Gray code to natural Binary

Let $g$ be the equivalent Gray code for an $n$-bit binary number $b$, with $i$ representing the index of the bit.

1. For the index $i$ of the most significant bit (MSB) of the Gray…
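As a rough illustration of the conversion this section introduces, here is a minimal Python sketch of the standard Gray to binary rule: the MSB is copied unchanged, and every lower binary bit is the XOR of the previous (more significant) binary bit with the current Gray bit. The function names `gray_to_binary_bits` and `gray_to_binary`, and the MSB-first list ordering, are assumptions made for this example and do not come from the original text.

```python
def gray_to_binary_bits(gray_bits):
    """Convert a list of Gray-coded bits (MSB first) to natural binary bits.

    Assumption for this sketch: bits are given as 0/1 integers, MSB first.
    """
    if not gray_bits:
        return []
    # The MSB of the binary number equals the MSB of the Gray code.
    binary_bits = [gray_bits[0]]
    # Each remaining binary bit is the previous binary bit XOR the Gray bit.
    for g in gray_bits[1:]:
        binary_bits.append(binary_bits[-1] ^ g)
    return binary_bits


def gray_to_binary(gray):
    """Same conversion on a non-negative integer, using shifts and XOR."""
    binary = gray
    shift = gray >> 1
    # XOR-ing in every right shift of the Gray code accumulates the
    # running XOR of all more significant Gray bits at each position.
    while shift:
        binary ^= shift
        shift >>= 1
    return binary


if __name__ == "__main__":
    # Gray code 110 corresponds to natural binary 100 (decimal 4).
    print(gray_to_binary_bits([1, 1, 0]))
    print(format(gray_to_binary(0b110), "03b"))
```

Both functions implement the same rule. The list version mirrors the bit-by-bit description, while the integer version is more convenient when symbol indices of a PAM or PSK constellation are stored as integers.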