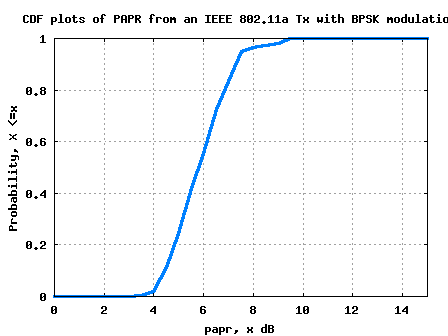

Peak to Average Power Ratio for OFDM

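Let us try to understand the peak to average power ratio (PAPR) and its typical value in an OFDM system specified per the IEEE 802.11a specifications.

What is PAPR? The peak to average power ratio for a signal $x(t)$ is defined as

$$PAPR = \frac{\max\left[x(t)\,x^*(t)\right]}{E\left[x(t)\,x^*(t)\right]},$$

where $()^*$ corresponds to the conjugate operator and $E[\cdot]$ denotes the average (expectation). Expressed in decibels,

$$PAPR_{dB} = 10\log_{10}(PAPR).$$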
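To make the definition concrete, here is a minimal Python sketch that computes PAPR as the peak of the instantaneous power (sample times its conjugate) divided by the mean of that power, and then converts it to decibels. The function names `papr` and `papr_db`, the block length of 64 samples, and the two test signals are assumptions chosen for illustration; they are not taken from the 802.11a specification.

```python
import cmath
import math


def papr(x):
    """Linear PAPR of a sampled signal: max(x x*) / mean(x x*)."""
    power = [(s * s.conjugate()).real for s in x]  # x x* = |x|^2
    return max(power) / (sum(power) / len(power))


def papr_db(x):
    """PAPR expressed in decibels: 10 log10(PAPR)."""
    return 10 * math.log10(papr(x))


N = 64  # illustrative block length (assumption)

# Test signal 1: a single complex exponential. Its envelope is constant,
# so the peak power equals the average power.
tone = [cmath.exp(2j * math.pi * 3 * n / N) for n in range(N)]

# Test signal 2: N equal-amplitude complex exponentials added in phase.
# They add constructively at n = 0 and cancel elsewhere.
aligned = [
    sum(cmath.exp(2j * math.pi * k * n / N) for k in range(N))
    for n in range(N)
]

print(papr_db(tone))     # approximately 0 dB
print(papr_db(aligned))  # approximately 18.06 dB, i.e. 10*log10(64)
```

The single tone has a PAPR of 1 (0 dB), while the in-phase sum of 64 tones has a PAPR of 64, which shows how adding many carriers coherently can push the peak far above the average.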