Happy Birthday – dspLog

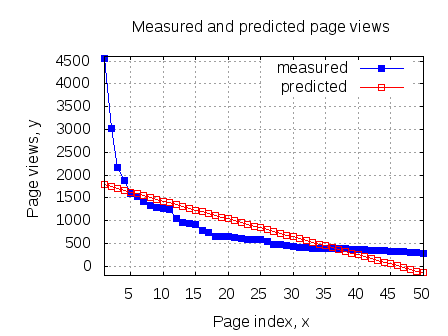

dspLog reached an important milestone on Oct 21st 2008: exactly one year earlier, the blog migrated from the Blogger platform to its independently hosted home at www.dsplog.com! Belated birthday wishes to the blog! 🙂 Looking back, the first year was satisfying, both in terms of content and traffic. We started…