An earlier post we discussed hard decision decoding for a Hamming (7,4) code and simulated the the bit error rate. In this post, let us focus on the soft decision decoding for the Hamming (7,4) code, and quantify the bounds in the performance gain.

Hamming (7,4) codes

With a Hamming code, we have 4 information bits and we need to add 3 parity bits to form the 7 coded bits.

The coding operation can be denoted in matrix algebra as follows:

where,

is the message sequence of dimension

,

is the coding matrix of dimension

,

is the coded sequence of dimension

.

Using the example provided in chapter eight (example 8.1-1) of Digital Communications by John Proakis , let the coding matrix be,

.

This matrix can be thought of as,

,

where,

is a

identity matrix and

is a

the parity check matrix.

Since an identity matrix, the first

coded bits are identical to source message bits and the remaining

bits form the parity check matrix.

This type of code matrix where the raw message bits are send as is is called systematic code.

Assuming that the message sequence is , then the coded output sequence is :

, where

,

,

.

The operator denotes exclusive-OR (XOR) operator.

The matrix of valid coded sequence of dimension

| Sl No | m0 | m1 | m2 | m3 | p0 | p1 | p2 |

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 1 | 0 | 0 | 0 | 1 | 0 | 1 | 1 |

| 2 | 0 | 0 | 1 | 0 | 1 | 1 | 0 |

| 3 | 0 | 0 | 1 | 1 | 1 | 0 | 1 |

| 4 | 0 | 1 | 0 | 0 | 1 | 1 | 1 |

| 5 | 0 | 1 | 0 | 1 | 1 | 0 | 0 |

| 6 | 0 | 1 | 1 | 0 | 0 | 0 | 1 |

| 7 | 0 | 1 | 1 | 1 | 0 | 1 | 0 |

| 8 | 1 | 0 | 0 | 0 | 1 | 0 | 1 |

| 9 | 1 | 0 | 0 | 1 | 1 | 1 | 0 |

| 10 | 1 | 0 | 1 | 0 | 0 | 1 | 1 |

| 11 | 1 | 0 | 1 | 1 | 0 | 0 | 0 |

| 12 | 1 | 1 | 0 | 0 | 0 | 1 | 0 |

| 13 | 1 | 1 | 0 | 1 | 0 | 0 | 1 |

| 14 | 1 | 1 | 1 | 0 | 1 | 0 | 0 |

| 15 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

Table: Coded output sequence for all possible input sequence

Minimum distance

Hamming distance computes the number of differing positions when comparing two code words. For the coded output sequence listed in the table above, we can see that the minimum separation between a pair of code words is 3.

If an error of weight occurs, it is possible to transform one code word to another valid code word and the error cannot be detected. So, the number of errors which can be detected is

.

To determine the error correction capability, let us visualize that we can have valid code words from possible

values. If each code word is visualized as a sphere of radius

, then the largest value of

which does not result in overlap between the sphere is,

where,

is the the largest integer in

.

Any code word that lies with in the sphere is decoded into the valid code word at the center of the sphere.

So the error correction capability of code with distance is

.

In our example, as , we can correct up-to 1 error.

Soft decision decoding

Let the received code word be,

, where

and

is the transmit code word,

is the additive white Gaussian noise with mean

and variance

and

form the elements of the code word.

Given that there are known code words, the goal is to find correlation of received vector

with each of the valid code words.

The correlation vector is,

where,

is the received coded sequence of dimension

,

is the matrix of valid code words sequence of dimension

and

is the vector of correlation value for each valid code word and is of dimension

.

From the correlation values, the index of the location where

is maximized corresponds to the maximum likelihood transmit code word.

Note:

The term is to given weights for the code words i.e.0 is given a weight -1, 1 is given a weight 1.

Hard decision decoding

To recap the discussion from the previous post, the hard decision decoding is done using parity check matrix .

Let the system model be,

, where

is the received code word of dimension

,

is the raw message bits of dimension

,

is the raw message bits

,

is the error locations of dimension

.

Multiplying the received code word with the parity check matrix,

.

The term is called the syndrome of the error pattern and is of dimension

. As the term

, the syndrome is affected only by the error sequence.

Assuming that the error hits only one bit,

a) There are can be possible error locations.

b) If the syndrome is 0, then it means that there is no errors.

c) The value of syndrome takes one among the valid 7 non-zero values. From the value of syndrome we can figure out which bit in the coded sequence is in error and correct it.

Note :

a) If we have more than one error location, then also the syndrome will fall into one of the 8 valid syndrome sequence and hence cannot be corrected.

b) The chosen Hamming (7,4) coding matrix , the dual code

is,

.

It can be seen that modulo-2 multiplication of the coding matrix with the transpose of the dual code matrix

is all zeros i.e

.

Asymptotic Coding gains

From Chapter 12.2.1 and Chapter 12.2.1 of Digital Communication: Third Edition, by John R. Barry, Edward A. Lee, David G. Messerschmitt, the asymptotic coding gain with soft decision decoding and hard decision decoding is given as,

.

where,

is the coding rate,

is the minimum distance between the code words and

is the maximum number of errors which can be corrected.

Simulation Model

The Matlab/Octave script performs the following

(a) Generate random binary sequence of 0’s and 1’s.

(b) Group them into four bits, add three parity bits and convert them to 7 coded bits using Hamming (7,4) systematic code

(c) Add White Gaussian Noise

(d) Perform hard decision decoding – compute the error syndrome for groups of 7 bits, correct the single bit errors

(e) Perform soft decision decoding

(f) Count the number of errors for both hard decision and soft decision decoding

(g) Repeat for multiple values of and plot the simulation results.

Click here to download Matlab/Octave script for computing BER for BPSK in Hamming (7,4) code with soft and hard decision decoding.

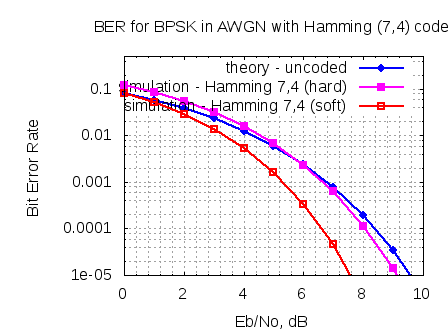

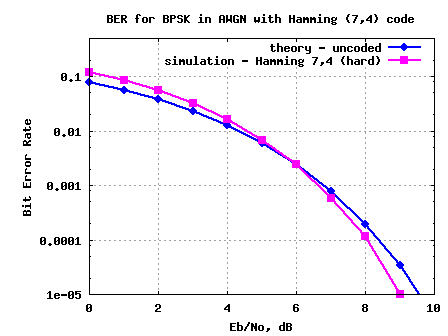

Figure : BER plot for Hamming (7,4) code with soft and hard decision decoding

Observations

a) At bit error rate close to , can see that the coding gains corresponding to hard and soft decision decoding is tending towards the asymptotic coding gain numbers.

References

Digital Communications by John Proakis

Digital Communication: Third Edition, by John R. Barry, Edward A. Lee, David G. Messerschmitt

Can you help me in soft decision decoding(without any channel code)?

what is the purpose of the following line.

[val idx] = max(cipSoftM*(2*c_vec.’-1),[],2)

@raja: it is finding the correlation between the received and the valid code word sequences

hi krishna

can you helf me get bitidx for hamming (15,11)

i am not able to figure it out

@arsh: The vector bitIdx stores the bit to correct based on the syndrome value.

For eg, if

– syndrome is 5, the bit to correct is 1,

– syndrome is 7, the bit to correct is 2,

– syndrome is 6, the bit to correct is 3, and so on.

You can find bit more discussion on the syndrome @

https://dsplog.com/2009/09/29/hamming-74-code-with-hard-decision-decoding/

Hope you can use this to expand to Hamming(15,11) coding

Hello Krishna,

in the code of HDD hamming simulation,

1) I understad how you find bitldx

bitIdx = [ 7 7 4 7 1 3 2].’;

I think that bitldx(2) should equal 6 not 7, and bitldx(4) should equal 5 not 7 as bellow:

bitIdx = [ 7 6 4 5 1 3 2].’;

why did you do that

2) converting the 0 decimal value of the syndrom to one will correct codewords that have no error(i.e, you creat not existed errors), why this does not affect the simulation ?

Thanks

@yzed:

1. The bitIdx stores the bit in error corresponding to the computed syndrome

For eg, for syndrome of 5, bit1 is in error; syndrome of 4, bit4 is in error and so on..

Please check out the post with hard decision decoding for bit more details

https://dsplog.com/2009/09/29/hamming-74-code-with-hard-decision-decoding/

2. Converting from 0 to 1 is because matlab/octave array indices start with 1

(in C and some other programming languages, the array index starts at 0).

Really interesting and informative blog.

Thanks.

Hi Krishna,

can you please help me how to solve the below problem with explanation.

A convolutional code is described by G=[1 0 1 1;

0 1 1 0;

1 1 0 1;

1 1 1 1]

a) If k=1, determine the output of the encoder when the input sequence is given as: 1 1 0 0 1 0 1 0 1 0 1 0 0 1 0 1 1 1 1 0 1 0 1 1 1 1 1 0 1 0

b) Repeat the part (a) when k=2.

Thank you

@pazmergo: Should be straight forward for you to compute. Try to look for

– generator polynomial

– coding rate

Do a mod2 convolution to get the output sequence.

Nice Article, Krishna.

Wanted to know a bit about how the asymptotic coding gains are derived. Would it be possible to provide some outline of the proof?

In practice, we often compare systems on an SNR scale. Two schemes giving identical performance on an Eb/No scale can give different performance at the same SNR. For example repetition coding on AWGN channels. In Eb/No terms there is no gain, but at the same SNR the system with repetition coding would result in a lower BER. (SNR defined as the power of the signal in dBm divided by the power of the noise in dBm.)

SNR = Es/No*Rs/W. Say Rs/W is kept constant. With a rate R code (R Eb/No uncoded. Hence the coding gains here will in fact result in another 2.43 dB improvement in performance in terms of SNR. The penalty of course is either decreased data rate or increased bandwidth (reduced spectral efficiency either way). Do you agree?

An interesting extension to this work could be to consider fading channels. Say a fully interleaved Rayleigh fading channel. The diversity gain that the receiver gets is a function of whether the decision is soft or hard. With hard decision the slope of the BER curve is less steep. May be interesting to quantify that in terms of the minimum distance.

@Vineet: Thanks. My replies:

a) Saw the derivation for asymptotic coding gain in the textbooks – but did not digest well enough to add them in this post. Will add to the TODO list.

b) The relation between Bit to Noise ratio Eb/N0, Symbol to Noise Ratio Es/N0, Signal to noise ratio SNR and expressing them in dBm levels is slightly tricky. I think I need to write it down carefully – again hopefully will end up writing another post on this topic.

c) Hmm… interleaving is a topic which I have not explored in this blog. Will start on that.

There is an error in your code.

File: script_bpsk_ber_awgn_hamming_7_4_code_soft_hard_decoding.m Line:

69 Column: 45

@Xia: Thanks. I tried running the code in my local Octave v3.0.5. Ran with out errors.

Am I missing something?

I run it in Matlab. I think this line has some problems.

ipHat_soft = base2dec(dec2bin(idx-1,4).'(:),2).’;

Matlab says “Unbalanced or unexpected parenthesis or bracket.”

I do not know what is the meaning of “(:)” in this line.

@Xia: Will try in Matlab.

The goal of (:) is to convert the matrix into a vector.

Try breaking that line into two parts, for eg,

tmp = dec2bin(idx-1,4).’;

tmp = tmp(:);

ipHat_soft = base2dec(tmp,2).’;

helps?

I had get the same problem. After breaking the line into two parts, it works now.

Thanks a lot, Krishna!